Network Security Concepts and Policies

In this chapter, you learn about the following topics:

- Fundamental concepts in network security, including identification of common vulnerabilities and threats, and mitigation strategies

- Implementation of a security architecture using a lifecycle approach, including the phases of the process, their dependencies, and the importance of a sound security policy

The open nature of the Internet makes it vital for businesses to pay attention to the security of their networks. As companies move more of their business functions to the public network, they need to take precautions to ensure that the data cannot be compromised and that the data is not accessible to anyone who is not authorized to see it.

Unauthorized network access by an outside hacker or a disgruntled employee can cause damage or destruction to proprietary data, negatively affect company productivity, and impede the capability to compete. The Computer Security Institute reported in its 2010/2011 CSI Computer Crime and Security Survey (available at http://gocsi.com/survey) that on an average day, 41.1 percent of respondents dealt with at least one security incident (see page 11 of the survey). Unauthorized network access can also harm relationships with customers and business partners, who might question the capability of a company to protect its confidential information. The definition of “data location” is being blurred by cloud computing services and other service trends. Individuals and corporations benefit from the elastic deployment of services in the cloud, available at all times from any device, but these dramatic changes in the business services industry exacerbate the risks in protecting data and the entities using it (individuals, businesses, governments, and so on). Security policies and architectures require sound principles and a lifecycle approach, regardless of whether the data is in the server farm, mobile on the employee’s laptop, or stored in the cloud.

To start on our network security quest, this chapter examines the need for security, looks at what you are trying to protect, and examines the different trends for attacks and protection and the principles of secure network design. These concepts are important not only for succeeding with the IINS 640-554 exam; they are also fundamental to all the security endeavors on which you will embark.

Building Blocks of Information Security

Establishing and maintaining a secure computing environment is increasingly difficult as networks become more interconnected and data flows ever more freely. In the commercial world, connectivity is no longer optional, and the possible risks of connectivity do not outweigh the benefits. Therefore, it is very important to enable networks to support security services that provide adequate protection to companies that conduct business in a relatively open environment. This section explains the breadth of assumptions and challenges involved in establishing and maintaining a secure network environment.

Basic Security Assumptions

Several new assumptions have to be made about computer networks because of their evolution over the years:

- Modern networks are very large, very interconnected, and run both ubiquitous protocols (such as IP) and proprietary protocols. Therefore, they are often open to access, and a potential attacker can with relative ease attach to, or remotely access, such networks. Widespread IP internetworking increases the probability that more attacks will be carried out over large, heavily interconnected networks, such as the Internet.

- Computer systems and applications that are attached to these networks are becoming increasingly complex. In terms of security, it becomes more difficult to analyze, secure, and properly test the security of the computer systems and applications; it is even more so when virtualization is involved. When these systems and their applications are attached to large networks, the risk to computing dramatically increases.

Basic Security Requirements

To provide adequate protection of network resources, the procedures and technologies that you deploy need to guarantee three things, sometimes referred to as the CIA triad:

- Confidentiality: Providing confidentiality of data guarantees that only authorized users can view sensitive information.

- Integrity: Providing integrity of data guarantees that only authorized users can change sensitive information and provides a way to detect whether data has been tampered with during transmission; this might also guarantee the authenticity of data.

- Availability of systems and data: System and data availability provides uninterrupted access by authorized users to important computing resources and data.

When designing network security, a designer must be aware of the following:

- The threats (possible attacks) that could compromise security

- The associated risks of the threats (that is, how relevant those threats are for a particular system)

- The cost to implement the proper security countermeasures for a threat

- A cost versus benefit analysis to determine whether it is worthwhile to implement the security countermeasures
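The cost versus benefit comparison in the list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a method prescribed by this chapter: the names `Threat`, `expected_yearly_loss`, and `is_worthwhile` are hypothetical, the risk estimate (likelihood multiplied by impact) is a simplifying assumption, and every figure is made up.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    """Hypothetical threat entry; every value used below is illustrative."""
    name: str
    yearly_likelihood: float  # assumed expected occurrences per year
    impact_cost: float        # assumed loss per occurrence


def expected_yearly_loss(threat: Threat) -> float:
    """Assumed risk estimate: likelihood multiplied by impact."""
    return threat.yearly_likelihood * threat.impact_cost


def is_worthwhile(threat: Threat, yearly_cost: float, reduction: float) -> bool:
    """Return True when the loss avoided exceeds the countermeasure cost.

    reduction is the assumed fraction (0.0 to 1.0) by which the
    countermeasure lowers the likelihood of the threat.
    """
    avoided_loss = expected_yearly_loss(threat) * reduction
    return avoided_loss > yearly_cost


defacement = Threat("website defacement", yearly_likelihood=0.5, impact_cost=40_000)
flood = Threat("regional flood", yearly_likelihood=0.01, impact_cost=500_000)

# A cheap countermeasure against a likely threat versus an expensive one
# against an unlikely threat.
print(is_worthwhile(defacement, yearly_cost=6_000, reduction=0.8))
print(is_worthwhile(flood, yearly_cost=250_000, reduction=1.0))
```

In practice, both the likelihood and the impact are estimates, so a result close to the break-even point deserves a closer look rather than an automatic decision.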

Data, Vulnerabilities, and Countermeasures

Although viruses, worms, and hackers monopolize the headlines about information security, risk management is the most important aspect of security architecture for administrators. A less exciting and glamorous area, risk management is based on specific principles and concepts that are related to asset protection and security management.

An asset is anything of value to an organization. By knowing which assets you are trying to protect, as well as their value, location, and exposure, you can more effectively determine the time, effort, and money to spend in securing those assets.

A vulnerability is a weakness in a system or its design that could be exploited by a threat. Vulnerabilities are sometimes found in the protocols themselves, as in the case of some security weaknesses in TCP/IP. Often, the vulnerabilities are in the operating systems and applications.

Written security policies might also be a source of vulnerabilities. This is the case when written policies are too lax or are not thorough enough in providing a specific approach or line of conduct to network administrators and users.

A threat is any potential danger to assets. A threat is realized when someone or something identifies a specific vulnerability and exploits it, creating exposure. If the vulnerability exists theoretically but has not yet been exploited, the threat is considered latent. The entity that takes advantage of the vulnerability is known as the threat agent or threat vector.

A risk is the likelihood that a particular threat using a specific attack will exploit a particular vulnerability of a system that results in an undesirable consequence. Although the roof of the data center might be vulnerable to being penetrated by a falling meteor, for example, the risk is minimal because the likelihood of that threat being realized is negligible.

NOTE

If you have a vulnerability but there is no threat toward that vulnerability, technically you have no risk.

An exploit happens when computer code is developed to take advantage of a vulnerability. For example, suppose that a vulnerability exists in a piece of software, but nobody knows about this vulnerability. Although the vulnerability exists theoretically, there is no exploit yet developed for it. Because there is no exploit, there really is no problem yet.

A countermeasure is a safeguard that mitigates a potential risk. A countermeasure mitigates risk either by eliminating or reducing the vulnerability or by reducing the likelihood that a threat agent will be able to exploit the vulnerability.

Key Concepts

- An asset is anything of value to an organization.

- A vulnerability is a weakness in a system or its design that could be exploited by a threat.

- A threat is a potential danger to information or systems.

- A risk is the likelihood that a particular vulnerability will be exploited.

- An exploit is an attack performed against a vulnerability.

- A countermeasure (safeguard) is the protection that mitigates the potential risk.

Data Classification

To optimally allocate resources and secure assets, it is essential that some form of data classification exists. By identifying which data has the most worth, administrators can put their greatest effort toward securing that data. Without classification, data custodians find it almost impossible to adequately secure the data, and IT management finds it equally difficult to optimally allocate resources.

Sometimes information classification is a regulatory requirement (required by law), in which case there might be liability issues that relate to the proper care of data. By classifying data correctly, data custodians can apply the appropriate confidentiality, integrity, and availability controls to adequately secure the data, based on regulatory, liability, and ethical requirements. When an organization takes classification seriously, it illustrates to everyone that the company is taking information security seriously.

The methods and labels applied to data differ all around the world, but some patterns do emerge. The following is a common way to classify data that many government organizations, including the military, use:

- Unclassified: Data that has few or no confidentiality, integrity, or availability requirements; therefore, little effort is made to secure it.

- Restricted: Data that if leaked could have undesirable effects on the organization. This classification is common among NATO (North Atlantic Treaty Organization) countries but is not used by all nations.

- Confidential: Data that must comply with confidentiality requirements. This is the lowest level of classified data in this scheme.

- Secret: Data for which you take significant effort to keep secure because its disclosure could lead to serious damage. The number of individuals who have access to this data is usually considerably fewer than the number of people who are authorized to access confidential data.

- Top secret: Data for which you make great effort and sometimes incur considerable cost to guarantee its secrecy since its disclosure could lead to exceptionally grave damage. Usually a small number of individuals have access to top-secret data, on condition that there is a need to know.

- Sensitive But Unclassified (SBU): A popular classification by government that designates data that could prove embarrassing if revealed, but no great security breach would occur. SBU is a broad category that also includes the For Official Use Only designation.

It is important to point out that there is no actual standard for private-sector classification. Furthermore, different countries tend to have different approaches and labels. Nevertheless, it can be instructive to examine a common private-sector classification scheme:

- Public: Companies often display public data in marketing literature or on publicly accessible websites.

- Sensitive: Data in this classification is similar to the SBU classification in the government model. Some embarrassment might occur if this data is revealed, but no serious security breach is involved.

- Private: Private data is important to an organization. You make an effort to maintain the secrecy and accuracy of this data.

- Confidential: Companies make the greatest effort to secure confidential data. Trade secrets and employee personnel files are examples of what a company would commonly classify as confidential.

Regardless of the classification labeling used, what is certain is that as the security classification of a document increases, the number of staff that should have access to that document should decrease, as illustrated in Figure 1-1.

Figure 1-1. Ratio: Staff Access to Information Security Classification
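The inverse relationship between classification level and staff access can be sketched as follows. The labels are the private-sector ones from this section; everything else is an assumption made for illustration: the staff names, their clearances, and the simple rule that a user may read anything at or below the user's clearance (a real system would also apply need to know).

```python
from enum import IntEnum
from typing import List


class Classification(IntEnum):
    """Private-sector labels, ordered from least to most sensitive."""
    PUBLIC = 0
    SENSITIVE = 1
    PRIVATE = 2
    CONFIDENTIAL = 3


# Hypothetical staff clearances; names and levels are made up.
STAFF_CLEARANCE = {
    "alice": Classification.CONFIDENTIAL,
    "bob": Classification.PRIVATE,
    "carol": Classification.SENSITIVE,
    "dave": Classification.PUBLIC,
}


def can_read(user: str, label: Classification) -> bool:
    """Assumed rule: read access at or below the user's clearance.

    Unknown users are treated as cleared for public data only.
    """
    return STAFF_CLEARANCE.get(user, Classification.PUBLIC) >= label


def readers(label: Classification) -> List[str]:
    """Return the staff members allowed to read data with this label."""
    return sorted(user for user in STAFF_CLEARANCE if can_read(user, label))


# The higher the label, the shorter the list of readers.
for label in Classification:
    print(label.name, readers(label))
```

Using an ordered enumeration keeps the comparison in one place, so adding a label only requires inserting it at the right position in the ordering.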

Many factors go into the decision of how to classify certain data. These factors include the following:

- Value: Value is the number one criterion. Not all data has the same value. The home address and medical information of an employee are considerably more sensitive (valuable) than the name of the chief executive officer (CEO) and the main telephone number of the company.

- Age: For many types of data, its importance changes with time. For example, an army general will go to great lengths to restrict access to military secrets. But after the war is over, the information is gradually less and less useful and eventually is declassified.

- Useful life: Often data is valuable for only a set window of time, and after that window has expired, there is no need to keep it classified. An example of this type of data is confidential information about the products of a company. The useful life of the trade secrets of products typically expires when the company no longer sells the product.

- Personal association: Data of this type usually involves something of a personal nature. Much of the government data regarding employees is of this nature. Steps are usually taken to protect this data until the person is deceased.

NOTE

To further understand the value of information, think about the Federal Reserve Bank (commonly called the Fed) and the discount rate it sets. The discount rate is, in essence, the interest rate charged to commercial banks by the Fed.

Periodically, the Fed announces a new discount rate. Typically, if the rate is higher than the previous rate, the stock market reacts with sell-offs. If the discount rate is lower, the stock market rises.

Therefore, moments before the Fed announces the new discount rate, that information is worth gazillions of dollars. However, the value of this information drops to nothing when it hits the wire, because everyone then has free access to the information.

For a classification system to work, there must be different roles that are fulfilled. The most common of these roles are as follows:

- Owner: The owner is the person who is ultimately responsible for the information, usually a senior-level manager who is in charge of a business unit. The owner classifies the data and usually selects custodians of the data and directs their actions. It is important that the owner periodically review the classified data because the owner is ultimately responsible for the data.

- Custodian: The custodian is usually a member of the IT staff who has the day-to-day responsibility for data maintenance. Because the owner of the data is not required to have technical knowledge, the owner decides the security controls but the custodian marks the data to enforce these security controls. To maintain the availability of the data, the custodian regularly backs up the data and ensures that the backup media is secure. Custodians also periodically review the security settings of the data as part of their maintenance responsibilities.

- User: Users bear no responsibility for the classification of data or even the maintenance of the classified data. However, users do bear responsibility for using the data in accordance with established operational procedures so that they maintain the security of the data while it is in their possession.

Vulnerability Classifications

It is also important to understand the weaknesses in security countermeasures and operational procedures. This understanding results in more effective security architectures. When analyzing system vulnerabilities, it helps to categorize them in classes to better understand the reasons for their emergence. You can classify the main vulnerabilities of systems and assets using broad categories:

- Policy flaws

- Design errors

- Protocol weaknesses

- Software vulnerabilities

- Misconfiguration

- Hostile code

- Human factor

This list mentions just a few of the vulnerability categories. For each of these categories, multiple vulnerabilities could be listed.

There are several industry efforts that are aimed at categorizing threats for the public domain. These are some well-known, publicly available catalogs that may be used as templates for vulnerability analysis:

- Common Vulnerabilities and Exposures (CVE): A dictionary of publicly known information security vulnerabilities and exposures. It can be found at http://cve.mitre.org/. The database provides common identifiers that enable data exchange between security products, providing a baseline index point for evaluating coverage of tools and services.

- National Vulnerability Database (NVD): The U.S. government repository of standards-based vulnerability management data. This data enables automation of vulnerability management, security measurement, and compliance. NVD includes databases of security checklists, security-related software flaws, misconfigurations, product names, and impact metrics. The database can be found at http://nvd.nist.gov.

- Common Vulnerability Scoring System (CVSS): A standard within the computer and networking fields for assessing and classifying security vulnerabilities. This standard is focused on rating a vulnerability compared to others, thus helping the administrator to set priorities. This standard was adopted by significant players in the industry such as McAfee, Qualys, Tenable, and Cisco. More information can be found, including the database and calculator, at http://www.first.org/cvss.
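To illustrate how CVSS ratings help an administrator set priorities, the following sketch maps base scores to severity labels and sorts findings from most to least severe. The score bands follow the qualitative severity rating scale published with CVSS v3.x; the function names and the finding identifiers are placeholders invented for this example and do not refer to real CVE entries.

```python
from typing import List, Tuple


def cvss_v3_severity(score: float) -> str:
    """Map a CVSS v3.x base score (0.0 to 10.0) to its qualitative rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError("CVSS scores range from 0.0 to 10.0")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def prioritize(findings: List[Tuple[str, float]]) -> List[Tuple[str, float]]:
    """Order findings so that the highest score is handled first."""
    return sorted(findings, key=lambda finding: finding[1], reverse=True)


# Placeholder identifiers and scores, not real vulnerabilities.
findings = [
    ("EXAMPLE-0001", 5.3),
    ("EXAMPLE-0002", 9.8),
    ("EXAMPLE-0003", 7.5),
    ("EXAMPLE-0004", 3.1),
]

for identifier, score in prioritize(findings):
    print(identifier, score, cvss_v3_severity(score))
```

A base score alone does not capture how exposed a given system is in your environment, so this ordering is a starting point for triage rather than a final ranking.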

Countermeasures Classification

After assets (data) and vulnerabilities, threats are the most important component to understand. Threat classification and analysis, as part of the risk management architecture, will be described later in this chapter.

Once threat vectors are considered, organizations rely on various controls to accomplish defense in depth as part of their security architecture. There are several ways to classify these security controls; one of them is based on the nature of the control itself. These controls fall into one of three categories:

- Administrative: Controls that are largely policies and procedures

- Technical: Controls that involve electronics, hardware, software, and so on

- Physical: Controls that are mostly mechanical

Later in this chapter, we will discuss models and frameworks from different organizations that can be used to implement network security best practices.

Administrative Controls

Administrative controls are largely policy and procedure driven. You will find many of the administrative controls that help with an enterprise’s information security in the human resources department. Some of these controls are as follows:

- Security-awareness training

- Security policies and standards

- Change controls and configuration controls

- Security audits and tests

- Good hiring practices

- Background checks of contractors and employees

For example, if an organization has strict hiring practices that require drug testing and background checks for all employees, the organization will likely hire fewer individuals of questionable character. With fewer people of questionable character working for the company, it is likely that there will be fewer problems with internal security issues. These controls do not single-handedly secure an enterprise, but they are an important part of an information security program.

Technical Controls

Technical controls are extremely important to a good information security program, and proper configuration and maintenance of these controls will significantly improve information security. The following are examples of technical controls:

- Firewalls

- Intrusion prevention systems (IPS)

- Virtual private network (VPN) concentrators and clients

- TACACS+ and RADIUS servers

- One-time password (OTP) solutions

- Smart cards

- Biometric authentication devices

- Network Admission Control (NAC) systems

- Routers with ACLs

NOTE

This book focuses on technical controls because implementing the Cisco family of security products is the primary topic. However, it is important to remember that a comprehensive security program requires much more than technology.

Physical Controls

While trying to secure an environment with good technical and administrative controls, it is also necessary that you lock the doors in the data center. This is an example of a physical control. Other examples of physical controls include the following:

- Intruder detection systems

- Security guards

- Locks

- Safes

- Racks

- Uninterruptible power supplies (UPS)

- Fire-suppression systems

- Positive air-flow systems

When security professionals examine physical security requirements, life safety (protecting human life) should be their number one concern. Good planning is needed to balance life safety concerns against security concerns. For example, permanently barring a door to prevent unauthorized physical access might prevent individuals from escaping in the event of a fire. By the way, physical security is a field that Cisco entered a few years ago. More information on those products can be found at http://www.cisco.com/go/physicalsecurity.

Convergence of Physical and Technical Security

One of the best examples of the convergence of physical and technical security I have witnessed was during a technical visit with a bank in Doha, Qatar, a few weeks before the grand opening of their new head office. They had extensive physical security, using a mix of contactless smart cards and biometrics.

They had cleverly linked the login system for traders to the physical security system. For instance, a trader coming to work in the morning had to use his smart card to enter the building, to activate the turnstile, to call the exact floor where the elevator was to stop, and to be granted access through the glass doors of the trading floors. The movements of the traders were recorded by the physical security systems. Minutes later, upon logging in to perform the first trade of the day, the trading authentication, authorization, and accounting (AAA) system queried the physical security system about the location of the trader. The trader was granted access to the trading system only when the physical security system confirmed to the trading AAA system that the trader was physically on the trading floor.
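The login flow in this anecdote can be sketched as a two-step check. This is a minimal illustration, not the bank's actual design: the class names, method names, zone labels, and credentials are all hypothetical, and passwords are kept in plain text only to keep the sketch short.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

TRADING_FLOOR = "trading-floor"  # hypothetical zone name


@dataclass
class PhysicalSecuritySystem:
    """Stand-in for the badge system: remembers the last zone each card opened."""
    last_zone: Dict[str, str] = field(default_factory=dict)

    def record_badge_swipe(self, trader_id: str, zone: str) -> None:
        self.last_zone[trader_id] = zone

    def current_zone(self, trader_id: str) -> Optional[str]:
        return self.last_zone.get(trader_id)


@dataclass
class TradingAAA:
    """Stand-in for the trading AAA system."""
    physical: PhysicalSecuritySystem
    passwords: Dict[str, str]  # plain text only to keep the sketch short

    def login(self, trader_id: str, password: str) -> bool:
        # Step 1: ordinary credential check.
        if self.passwords.get(trader_id) != password:
            return False
        # Step 2: ask the physical security system where the trader is.
        return self.physical.current_zone(trader_id) == TRADING_FLOOR


doors = PhysicalSecuritySystem()
aaa = TradingAAA(doors, {"trader1": "s3cret", "trader2": "hunter2"})

# trader1 badges through every door on the way to the trading floor.
for zone in ("building-entrance", "turnstile", "elevator", TRADING_FLOOR):
    doors.record_badge_swipe("trader1", zone)

print(aaa.login("trader1", "s3cret"))   # badge trail ends on the trading floor
print(aaa.login("trader2", "hunter2"))  # valid password, but no badge trail
```

The point of the design is that stolen credentials alone are not enough: the second check ties the logical login to a physical presence that the attacker would also have to fake.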

Controls are also categorized by the type of control they are:

- Preventive: The control prevents access.

- Deterrent: The control deters access.

- Detective: The control detects access.

All three categories of controls can be any one of the three types of controls; for example, a preventive control can be administrative, physical, or technical.

NOTE

A security control is any mechanism that you put in place to reduce the risk of compromise of any of the three CIA objectives: confidentiality, integrity, and availability.

Preventive controls exist to prevent compromise. This statement is true whether the control is administrative, technical, or physical. The ultimate purpose for these controls is to stop security breaches before they happen.

However, a good security design also prepares for failure, recognizing that prevention will not always work. Therefore, detective controls are also part of a comprehensive security program because they enable you to detect a security breach and to determine how the network was breached. With this knowledge, you should be able to better secure the data the next time.

With effective detective controls in place, the incident response team can use the detective controls to figure out what went wrong, allowing you to immediately make changes to policies to eliminate a repeat of that same breach. Without detective controls, it is extremely difficult to determine what you need to change.

Deterrent controls are designed to scare away a certain percentage of adversaries to reduce the number of incidents. Cameras in bank lobbies are a good example of a deterrent control. The cameras most likely deter at least some potential bank robbers. The cameras also act as a detective control.

NOTE

To be more concrete, examples of types of physical controls include the following:

- Preventive: Locks on doors

- Deterrent: Video surveillance

- Detective: Motion sensor

NOTE

It is not always possible to classify a control into only one category or type. Sometimes there is overlap in the definitions, as in the case of the previously mentioned bank lobby cameras. They serve as both deterrent and detective controls.
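The two ways of classifying controls described in this section (by category and by type) can be combined in a small data model. The following is a sketch under stated assumptions: the class and function names are hypothetical, and the type assigned to the firewall and to security audits is an illustrative choice rather than a statement from this chapter. A flag enumeration lets one control carry more than one type, as the bank lobby cameras do.

```python
from dataclasses import dataclass
from enum import Enum, Flag, auto
from typing import List


class Category(Enum):
    ADMINISTRATIVE = "administrative"
    TECHNICAL = "technical"
    PHYSICAL = "physical"


class ControlType(Flag):
    PREVENTIVE = auto()
    DETERRENT = auto()
    DETECTIVE = auto()


@dataclass(frozen=True)
class Control:
    name: str
    category: Category
    types: ControlType  # may combine several types with the | operator


CONTROLS = [
    Control("Locks on doors", Category.PHYSICAL, ControlType.PREVENTIVE),
    Control("Motion sensor", Category.PHYSICAL, ControlType.DETECTIVE),
    Control("Bank lobby cameras", Category.PHYSICAL,
            ControlType.DETERRENT | ControlType.DETECTIVE),
    # The two entries below are assumed classifications for illustration.
    Control("Firewall", Category.TECHNICAL, ControlType.PREVENTIVE),
    Control("Security audits and tests", Category.ADMINISTRATIVE,
            ControlType.DETECTIVE),
]


def controls_of_type(wanted: ControlType) -> List[str]:
    """Names of all controls that include the wanted type."""
    return [control.name for control in CONTROLS if wanted in control.types]


print(controls_of_type(ControlType.DETECTIVE))
print(controls_of_type(ControlType.DETERRENT))
```

Listing controls this way makes gaps visible, for example a category that has preventive controls but nothing detective.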

Need for Network Security

Business goals and risk analysis drive the need for network security. For a while, information security was influenced to some extent by fear, uncertainty, and doubt. Examples of these influences included the fear of a new worm outbreak, the uncertainty of providing web services, or doubts that a particular leading-edge security technology would hold up. But we realized that regardless of the security implications, business needs had to come first.

If your business cannot function because of security concerns, you have a problem. The security system design must accommodate the goals of the business, not hinder them. Therefore, risk management involves answering two key questions:

- What does the cost-benefit analysis of your security system tell you?

- How will the latest attack techniques play out in your network environment?

Dealing with Risk

There are actually four ways to deal with risk:

- Reduce: This is where we IT managers operate, and it is the main focus of this book. We are responsible for mitigating the risks. Four activities contribute to reducing risks:

  - Limitation/avoidance: Creating a secure environment that does not allow actions that would cause risks to occur, for example by installing a firewall, using encryption systems and strong authentication, and so on

  - Assurance: Ensuring policies, standards, and practices are followed

  - Detection: Detecting intrusion attempts and taking appropriate action to terminate the intrusion

  - Recovery: Restoring the system to an operational state

- Ignore: This is not an option for an IT manager. The moment you become aware of a risk, you must acknowledge that risk and decide how to deal with it: accept this risk, transfer this risk, or reduce this risk.

- Accept: This means that you document that there is a risk, but take no action to mitigate that risk because the risk is too far-fetched or the mitigation costs are too prohibitive.

- Transfer: This is buying insurance against a risk that cannot be eliminated or reduced further.
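One way to make the "ignore is not an option" rule concrete is a risk register in which every known risk must carry a recorded decision. The following sketch is an assumption-laden illustration: the `Treatment`, `RiskEntry`, and `problems` names, the register contents, and the validation rules are hypothetical and simply mirror the descriptions above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Treatment(Enum):
    REDUCE = "reduce"
    ACCEPT = "accept"
    TRANSFER = "transfer"
    # There is deliberately no IGNORE member.


@dataclass
class RiskEntry:
    description: str
    treatment: Optional[Treatment] = None  # None means nobody has decided yet
    rationale: str = ""                    # documentation of the decision


def problems(entry: RiskEntry) -> List[str]:
    """List what is missing before the entry counts as dealt with."""
    found = []
    if entry.treatment is None:
        found.append("no decision recorded: a known risk cannot be ignored")
    elif entry.treatment is Treatment.ACCEPT and not entry.rationale:
        found.append("accepted risks must be documented")
    return found


# Made-up register entries.
register = [
    RiskEntry("Worm outbreak on user VLANs", Treatment.REDUCE, "deploy IPS"),
    RiskEntry("Data center flooding", Treatment.TRANSFER, "insurance policy"),
    RiskEntry("Meteor strike on the roof", Treatment.ACCEPT),
    RiskEntry("Unpatched lab server"),
]

for entry in register:
    print(entry.description, problems(entry))
```

Leaving out an "ignore" option in the data model means that an undecided risk shows up as an open item instead of silently disappearing.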

Figure 1-2 illustrates the key factors you should consider when designing a secure network:

- Business needs: What does your organization want to do with the network?

- Risk analysis: What is the risk and cost balance?

- Security policy: What are the policies, standards, and guidelines that you need to address business needs and risks?

- Industry best practices: What are the reliable, well-understood, and recommended security best practices?

- Security operations: These operations include incident response, monitoring, maintenance, and auditing the system for compliance.


Figure 1-2. Factors Affecting the Design of a Secure Network

Risk management and security policies will be detailed later in this chapter.

Intent Evolution

When viewed from the perspective of motivation intersecting with opportunity, risk management can be driven not only by the techniques or sophistication of the attackers and threat vectors, but also by their motives. Research reveals that hackers are increasingly motivated by profit, where in the past they were motivated by notoriety and fame. In instances of attacks carried out for financial gains, hackers are not looking for attention, which makes their exploits harder to detect. Few signatures exist or will ever be written to capture these “custom” threats. In order to be successful in defending your environments, you must employ a new model to catch threats across the infrastructure.

Attackers are also motivated by government or industrial espionage. The Stuxnet worm, whose earliest versions appear to date to 2009, is an example. This worm differs from its malware “cousins” in that it has a specific, damaging goal: to traverse industrial control systems, such as supervisory control and data acquisition (SCADA) systems, so that it can reprogram the programmable logic controllers, possibly disrupting industrial operations.

This worm was not created to gather credit card numbers to sell off to the highest bidder, or to sell fake pharmaceuticals. This worm appears to have been created solely to invade public or private infrastructure. The cleverness of Stuxnet lies in its ability to traverse non-networked systems, which means that even systems unconnected to networks or the Internet are at risk.

Security experts have called Stuxnet “the smartest malware ever.” This worm breaks the malware mold because it is designed to disrupt industrial control systems in critical infrastructure. This ability should be a concern for every government.

Motivation can also be political or in the form of vigilantism. Anonymous is currently the best-known hacktivist group. As a recent example of its activities, in May 2012, Anonymous attacked the website of the Quebec government after its promulgation of a law imposing new requirements for the right to protest by college and university students.

Threat Evolution

The nature and sophistication of threats, as well as their pervasiveness and global nature, are trends to watch. Figure 1-3 shows how the threats that organizations face have evolved over the past few decades, and how the growth rate of vulnerabilities that are reported in operating systems and applications is rising. The number and variety of viruses and worms that have appeared over the past three years is daunting, and their rate of propagation is frightening. There have been unacceptable levels of business outages and expensive remediation projects that consume staff, time, and funds that were not originally budgeted for such tasks.


Figure 1-3. Shrinking Time Frame from Knowledge of Vulnerability to Release of Exploits

New exploits are designed to have global impact in minutes. Blended threats, which use multiple means of propagation, are more sophisticated than ever. The trends are becoming regional and global in nature. Early attacks affected single systems or one organization network, while attacks that are more recent are affecting entire regions. For example, attacks have expanded from individual denial of service (DoS) attacks from a single attacker against a single target, to large-scale distributed DoS (DDoS) attacks emanating from networks of compromised systems that are known as botnets.

Threats are also becoming persistent. After an attack starts, attacks may appear in waves as infected systems join the network. Because infections are so complex and involve so many end users (employees, vendors, and contractors), multiple types of endpoints (company desktop, home, and server), and multiple types of access (wired, wireless, VPN, and dial-up), infections are difficult to eradicate.

More recent threat vectors are increasingly sophisticated, and the motivation of the attackers is reflected in their impact. Recent threat vectors include the following:

- Cognitive threats via social networks (likejacking): Social engineering takes a new meaning in the era of social networking. From phishing attacks that target social network accounts of high-profile individuals, to information exposure due to lack of policy, social networks have become a target of choice for malicious attackers.

- PDA and consumer electronics exploits: The operating systems on consumer devices (smartphones, PDAs, and so on) are a target of choice for high-volume attacks. The proliferation of applications for these operating systems, and the nature of the development and certification processes for those applications, compounds the problem.

- Widespread website compromises: Malicious attackers compromise popular websites, making the sites download malware to connecting users. Attackers typically are not interested in the data on the website, but use it as a springboard to infect the users of the site.

- Disruption of critical infrastructure: The Stuxnet malware, which exploits holes in Windows systems and targets a specific Siemens supervisory control and data acquisition (SCADA) program with sabotage, confirmed concerns about an increase in targeted attacks aimed at the power grid, nuclear plants, and other critical infrastructure.

- Virtualization exploits: Device and service virtualization add more complexity to the network. Attackers know this and are increasingly targeting virtual servers, virtual switches, and trust relationships at the hypervisor level.

- Memory scraping: Increasingly popular, this technique is aimed at fetching information directly from volatile memory. The attack tries to exploit operating systems and applications that leave traces of data in memory. Attacks are particularly aimed at encrypted information that may be processed as unencrypted in volatile memory.

- Hardware hacking: These attacks are aimed at exploiting the hardware architecture of specific devices, with consumer devices being increasingly popular. Attack methods include bus sniffing, altering firmware, and memory dumping to find crypto keys.

- IPv6-based attacks: These attacks could become more pervasive as the migration to IPv6 becomes widespread. Attackers are focusing initially on covert channels through various tunneling techniques, and man-in-the-middle attacks leverage IPv6 to exploit IPv4 in dual-stack deployments.

Trends Affecting Network Security

Other

trends in business, technology, and innovation influence the need for

new paradigms in information security. Mobility is one trend. Expect to

see billions of new network mobile devices moving into the enterprise

worldwide over the next few years. Taking into consideration constant

reductions and streamlining in IT budgets, organizations face serious

challenges in supporting a growing number of mobile devices at a time

when their resources are being reduced.

The second

market transition is cloud computing and cloud services. Organizations

of all kinds are taking advantage of offerings such as Software as a

Service (SaaS) and Infrastructure as a Service (IaaS) to reduce costs

and simplify the deployment of new services and applications.

These

cloud services add challenges in visibility (how do you identify and

mitigate threats that come to and from a trusted network?), control (who

controls the physical assets, encryption keys, and so on?), and trust

(do you trust cloud partners to ensure that critical application data is

still protected when it is off the enterprise network?).

The

third market transition is about changes to the workplace experience.

Borders are blurring in the organization between consumers and workers

and between the various functions within the organization. The borders between the company and its partners, customers, and suppliers are also fading. As a result, the network is experiencing increasing demand to

connect anyone, any device, anywhere, at any time.

These

changes represent a challenge to security teams within the

organization. These teams now need to manage noncontrolled consumer

devices, such as a personal tablet, coming into the network, and provide

seamless and context-aware services to users all over the world. The

location of the data and services accessed by the users is almost

irrelevant. The data could be internal to the organization or it could

be in the cloud. This situation makes protecting data and services a

challenging proposition.

NOTE

Readers interested in staying current with network security trends and technologies can subscribe to some of the numerous podcasts available on iTunes, such as:

- Cisco Interactive Network TechWiseTV

- Security Now!

- Security Wire Weekly

- Silver Bullet Security

- Crypto-Gram Security

Attacks

are increasingly politically and financially motivated, driven by

botnets, and aimed at critical infrastructure; for example:

- Botnets are used for spam, data theft, mail relays, or simply for denial-of-service attacks (ref: http://en.wikipedia.org/wiki/Botnet).

- Zeus botnets reached an estimated 3.6 million bots, infected workstations, or “zombies” (ref: http://www.networkworld.com/news/2009/072209-botnets.html).

- Stuxnet was aimed at industrial systems.

- Malware is downloaded inadvertently from online marketplaces.

One

of the trends in threats is the exploitation of trust. Whether they are

creating malware that can subvert industrial processes or tricking

social network users into handing over login and password information,

cybercriminals have a powerful weapon at their disposal: the

exploitation of trust. Cybercriminals have become skilled at convincing

users that their infected links and URLs are safe to click, and that

they are someone the user knows and trusts. Hackers exploit the trust we

have in TinyURLs and in security warning banners. With stolen security

credentials, cybercriminals can freely interact with legitimate software

and systems.

Nowhere is this tactic more widespread

than within social networking, where cybercriminals continue to attract

victims who are willing to share information with people they believe

are known to them, with malware such as Koobface. One noticeable shift

in social engineering is that criminals are spending more time figuring

out how to assume someone’s identity, perhaps by generating emails from

an individual’s computer or social networking account. A malware-laden

email or scam sent by a “trusted person” is more likely to elicit a

click-through response than the same message sent by a stranger.

Threats

originating from countries outside of the United States are rapidly

increasing. Global annual spam volumes actually dropped in 2010, the

first time this has happened in the history of the Internet. However,

spammers are originating in increasingly varied locations and countries.

Money

muling is the practice of hiring individuals as “mules,” recruited by

handlers or “wranglers” to set up bank accounts, or even use their own

bank accounts, to assist in the transfer of money from the account of a

fraud victim to another location, usually overseas, via a wire transfer

or automated clearing house (ACH) transaction. Money mule operations

often involve individuals in multiple countries.

Web

malware is definitely on the rise. The number of distinct domains that

are compromised to download malware to connecting users is increasing

dramatically. The most dangerous aspect of this type of attack is the

fact that users do not need to do much to get infected. Many times, the

combination of malware on the website and vulnerabilities on web

browsers is enough to provoke infection just by connecting to the

website. The more popular the site, the higher the volume of potential

infection.

Recently there have been major shifts in the

compliance landscape. Although enforcement of existing regulations has

been weak in many jurisdictions worldwide, regulators and standards

bodies are now tightening enforcement through expanded powers, higher

penalties, and harsh enforcement actions. In the future it will be more

difficult to hide failures in information security wherever

organizations do business. Legislators are forcing transparency through

the introduction of breach notification laws in Europe, Asia, and North

America as data breach disclosure becomes a global principle.

As

more regulations are introduced, there is a trend toward increasingly

prescriptive rules. For example, recent amendments introduced in the

United Kingdom in 2011 bring arguably more prescriptive information

protection regulations to the Privacy and Electronic Communications

Directive. Such laws are discussed in more detail later in this

chapter. Any global enterprise that does business in the United Kingdom

today will likely be covered by these regulations. Lately, regulators

are also making it clear that enterprises are responsible for ensuring

the protection of their data when it is being processed by a business

partner, including cloud service providers. The new era of compliance

creates formidable challenges for organizations worldwide.

For

many organizations, stricter compliance could help focus management

attention on security, but if managers take a “check-list approach” to

compliance, it will detract from actually managing risk and may not

improve security. The new compliance landscape will increase costs and

risks. For example, it takes time and resources to substantiate

compliance. Increased requirements for service providers give rise to

more third-party risks.

With more transparency, there

are now greater consequences for data breaches. For example, expect to

see more litigation as customers and business partners seek compensation

for compromised data. But the harshest judgments will likely come from

the court of public opinion, with the potential to permanently damage an

enterprise’s reputation.

The following are some of the U.S. and international regulations that many companies are subject to:

- Sarbanes-Oxley (SOX)

- Federal Information Security Management Act (FISMA)

- Gramm-Leach-Bliley Act (GLBA)

- Payment Card Industry Data Security Standard (PCI DSS)

- Health Insurance Portability and Accountability Act (HIPAA)

- Digital Millennium Copyright Act (DMCA)

- Personal Information Protection and Electronic Documents Act (PIPEDA) in Canada

- European Union Data Protection Directive (EU 95/46/EC)

- Safe Harbor Act - European Union and United States

- International Convergence of Capital Measurement and Capital Standards (Basel II)

The challenge becomes to comply with these regulations and, at the same time, make that compliance translate into an effective security posture.

Adversaries, Methodologies, and Classes of Attack

Who

are hackers? What motivates them? How do they conduct their attacks?

How do they manage to breach the measures we have in place to ensure

confidentiality, integrity, and availability? Which best practices can

we adopt to defeat hackers? These are some of the questions we try to

answer in this section.

People are social beings, and

it is quite common for systems to be compromised through social

engineering. Harm can be caused by people just trying to be “helpful.”

For example, in an attempt to be helpful, people have been known to give

their passwords over the phone to attackers who have a convincing

manner and say they are troubleshooting a problem and need to test

access using a real user password. End users must be trained, and

reminded, that the ultimate security of a system depends on their

behavior.

Of course, people often cause harm within

organizations intentionally: most security incidents are caused by

insiders. Thus, strong internal controls on security are required, and

special organizational practices might need to be implemented.

An

example of a special organizational practice that helps to provide

security is the separation of duty, where critical tasks require two or

more persons to complete them, thereby reducing the risk of insider

threat. People are less likely to attack or misbehave if they are

required to cooperate with others.

Unfortunately, users

frequently consider security too difficult to understand. Software

often does not make security options or decisions easy for end users.

Also, users typically prefer “whatever” functionality to no

functionality. Implementation of security measures should not create an

internally generated DoS, meaning, if security is too stringent or too

cumbersome for users, either they will not have access to all the

resources needed to perform their work or their performance will be

hindered by the security operations.

Adversaries

To

defend against attacks on information and information systems,

organizations must begin to define the threat by identifying potential

adversaries. These adversaries can include the following:

- Nations or states

- Terrorists

- Criminals

- Hackers

- Corporate competitors

- Disgruntled employees

- Government agencies, such as the National Security Agency (NSA) and the Federal Bureau of Investigation (FBI)

Hackers

comprise the most well-known outside threat to information systems.

They are not necessarily geniuses, but they are persistent people who

have taken a lot of time to learn their craft.

Many titles are assigned to hackers:

Hackers:

Hackers are computer enthusiasts who break into networks and systems to

learn more about them. Such hackers generally mean no harm and do not

expect financial gain. Unfortunately, hackers may unintentionally pass

valuable information on to people who do intend to harm the system.

Hackers are subdivided into the following categories:

- White hat (ethical hacker)

- Blue hat (bug tester)

- Gray hat (ethically questionable hacker)

- Black hat (unethical hacker)

Crackers

(criminal hackers): Crackers are hackers with a criminal intent to harm

information systems. Crackers are generally working for financial gain

and are sometimes called black hat hackers.

Phreakers (phone

breakers): Phreakers pride themselves on compromising telephone systems.

Phreakers reroute and disconnect telephone lines, sell wiretaps, and

steal long-distance services.

NOTE

Individuals whose intent is to exploit a network maliciously are often incorrectly referred to as

hackers. In this section, the term hacker is used, but might refer to

someone more correctly referred to as a cracker, or black hat hacker.

Script

kiddies: Script kiddies think of themselves as hackers, but have very

low skill levels. They do not write their own code; instead, they run

scripts written by other, more skilled attackers.

Hacktivists:

Hacktivists are individuals who have a political agenda in doing their

work. When government websites are defaced, this is usually the work of a

hacktivist.

Methodologies

The goal of any hacker

is to compromise the intended target or application. Hackers begin with

little or no information about the intended target, but by the end of

their analysis, they have accessed the network and have begun to

compromise their target. Their approach is usually careful and

methodical, not rushed and reckless. The seven-step process that follows

is a good representation of the methods that hackers use:

- Step 1. Perform footprint analysis (reconnaissance).

- Step 2. Enumerate applications and operating systems.

- Step 3. Manipulate users to gain access.

- Step 4. Escalate privileges.

- Step 5. Gather additional passwords and secrets.

- Step 6. Install back doors.

- Step 7. Leverage the compromised system.

CAUTION

Hackers

have become successful by thinking “outside the box.” This methodology

is meant to illustrate the steps that a structured attack might take.

Not all hackers will follow these steps in this order.

To

successfully hack into a system, hackers generally first want to know

as much as they can about the system. Hackers can build a complete

profile, or “footprint,” of the company security posture. Using a range

of tools and techniques, an attacker can discover the company domain

names, network blocks, IP addresses of systems, ports and services that

are used, and many other details that pertain to the company security

posture as it relates to the Internet, an intranet, remote access, and

an extranet. By following some simple advice, network administrators can

make footprinting more difficult.

After hackers have

completed a profile, or footprint, of your organization, they use tools

such as those in the list that follows to enumerate additional

information about your systems and networks. All these tools are readily

available to download, and the security staff should know how these

tools work. Additional tools (introduced later in the “Security Testing

Techniques” section) can also be used to gather information and, in turn, to attack a network.

- Netcat: Netcat is a feature-rich networking utility that reads and writes data across network connections.

- Microsoft EPDump and Microsoft Remote Procedure Call (RPC) Dump: These tools provide information about Microsoft RPC services on a server.

- GetMAC: This application provides a quick way to find the MAC (Ethernet) layer address and binding order for a computer running Microsoft Windows locally or across a network.

- Software development kits (SDK): SDKs provide hackers with the basic tools that they need to learn more about systems.

Another

common technique that hackers use is to manipulate users of an

organization to gain access to that organization. There are countless

cases of unsuspecting employees providing information to unauthorized

people simply because the requesters appear innocent or to be in a

position of authority. Hackers find names and telephone numbers on

websites or domain registration records by footprinting. Hackers then

directly contact these people by phone and convince them to reveal

passwords. Hackers gather information without raising any concern or

suspicion. This form of attack is called social engineering. One form of

a social engineering attack is for the hacker to pose as a visitor to

the company, a delivery person, a service technician, or some other

person who might have a legitimate reason to be on the premises and,

after gaining entrance, walk by cubicles and look under keyboards to see

whether anyone has put a note there containing the current password.

The

next thing the hacker typically does is review all the information that

they have collected about the host, searching for usernames, passwords,

and Registry keys that contain application or user passwords. This

information can help hackers escalate their privileges on the host or

network. If reviewing the information from the host does not reveal

useful information, hackers may launch a Trojan horse attack in an

attempt to escalate their privileges on the host. This type of attack

usually means copying malicious code to the user system and giving it

the same name as a frequently used piece of software.

After

the hacker has obtained higher privileges, the next task is to gather

additional passwords and other sensitive data. The targets now include

such things as the local security accounts manager database or the

Active Directory of a domain controller. Hackers use legitimate tools

such as pwdump and lsadump applications to gather passwords from

machines running Windows, which then can be cracked with the very

popular Cain & Abel software tool. By cross-referencing username and

password combinations, the hacker is able to obtain administrative

access to all the computers in the network.

If hackers

are detected trying to enter through the “front door,” or if they want

to enter the system without being detected, they try to use “back doors”

into the system. A back door is a method of bypassing normal

authentication to secure remote access to a computer while attempting to

remain undetected. The most common backdoor point is a listening port

that provides remote access to the system for users (hackers) who do not

have, or do not want to use, access or administrative privileges.

After

hackers gain administrative access, they typically use the compromised system to attack other systems on the network. As each new system is hacked, the attacker performs the

steps that were outlined previously to gather additional system and

password information. Hackers try to scan and exploit a single system or

a whole set of networks and usually automate the whole process.

In addition, hackers will cover their tracks either by deleting log entries or falsifying them.

Thinking Outside the Box

In

2005, David Sternberg hacked the Postal Bank in Israel by physically

breaking into one of the bank’s branches in Haifa and connecting a

wireless access point in the branch’s IT infrastructure. Sternberg

rented office space about 100 feet from the bank and proceeded to

transfer funds to bank accounts in his name or in friends’ names.

So

instead of trying for months to break into the IT security of the bank,

Sternberg thought outside of the box and broke through physical

security to gain access to the IT system.

Sternberg was

discovered when bank auditors noticed regular transfers from the main

bank account to the same individual accounts.

I guess that Sternberg had not heard about the security axiom that says “predictability is the enemy of security.”

A

common thread in infosec forums is that information security

specialists must patch all security holes in a network—a hacker only has

to find the one that wasn’t patched. Security is like a chain. It is

only as strong as its weakest link.

Threats Classification

In

classifying security threats, it is common to find general categories

that resemble the perspective of the attacker and the approaches that

are used to exploit software. Attack patterns are a powerful mechanism

to capture and communicate the perspective of the attacker. These

patterns are descriptions of common methods for exploiting

vulnerabilities. The patterns derive from the concept of design patterns

that are applied in a destructive rather than constructive context and

are generated from in-depth analysis of specific, real-world exploit

examples. The following list illustrates examples of threat categories

that are based on this criterion. Notice that some threats are not

malicious attacks. Examples of nonmalicious threats include forces of

nature such as hurricanes and earthquakes.

Later in this chapter, you learn about some of the general categories under which threats can be regrouped, such as:

- Enumeration and fingerprinting

- Spoofing and impersonation

- Man-in-the-middle

- Overt and covert channels

- Blended threats and malware

- Exploitation of privilege and trust

- Confidentiality

- Password attacks

- Availability attacks

- Denial of service (DoS)

- Botnet

- Physical security attacks

- Forces of nature

To

assist in enhancing security throughout the security lifecycle, there

are many publicly available classification databases that provide a

catalog of attack patterns and classification taxonomies. They are aimed

at providing a consistent view and method for identifying, collecting,

refining, and sharing attack patterns for specific communities of

interest. The following are four of the most prominent databases:

- Common Attack Pattern Enumeration and Classification (CAPEC): Sponsored by the U.S. Department of Homeland Security as part of the software assurance strategic initiative of the National Cyber Security Division, the objective of this effort is to provide a publicly available catalog of attack patterns along with a comprehensive schema and classification taxonomy. More information can be found at http://capec.mitre.org.

- Open Web Application Security Project (OWASP) Application Security Verification Standard (ASVS): OWASP is a not-for-profit worldwide charitable organization focused on improving the security of application software. The primary objective of ASVS is to normalize the range in the coverage and level of rigor available in the market when it comes to performing web application security verification using a commercially workable open standard. More information can be found at https://www.owasp.org.

- Web Application Security Consortium Threat Classification (WASC TC): Sponsored by the WASC, this is a cooperative effort to clarify and organize the threats to the security of a website. The project is aimed at developing and promoting industry-standard terminology for describing these issues. Application developers, security professionals, software vendors, and compliance auditors have the ability to access a consistent language and definitions for web security-related issues. More information can be found at http://www.webappsec.org.

- Malware Attribute Enumeration and Characterization (MAEC): Created by MITRE, this effort is international in scope and free for public use. MAEC is a standardized language for encoding and communicating high-fidelity information about malware based on attributes such as behaviors, artifacts, and attack patterns. More information can be found at http://maec.mitre.org.

Enumeration and Fingerprinting with Ping Sweeps and Port Scans

Enumeration

and fingerprinting are types of attacks that use legitimate tools for

illegitimate purposes. Some of the tools, such as port-scan and

ping-sweep applications, run a series of tests against hosts and devices

to identify vulnerable services that need attention. IP addresses and

port or banner data from both TCP and UDP ports are examined to gather

information.

In an illegitimate situation, a port scan

is a series of messages sent by someone attempting to break into a

computer to learn which computer network services (each service is

associated with a well-known port number) the computer provides. Port

scanning can be automated to scan a range of TCP or UDP port numbers on a

host to detect listening services. Port scanning, a favorite computer

hacker approach, provides information to the hacker about where to probe

for weaknesses. Essentially, a port scan consists of sending a message

to each port, one at a time. The kind of response received indicates

whether the port is being used and needs further probing.
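The "one message per port" idea can be illustrated with a minimal TCP connect scan. This is only a sketch: the function name `tcp_connect_scan` and the timeout value are assumptions made for this example, not part of any tool named in this chapter, and it should be run only against hosts you are authorized to test.

```python
import socket

def tcp_connect_scan(host, ports, timeout=0.5):
    """Return the subset of `ports` on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception,
            # so the kind of response tells us whether a service is listening
            if s.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

A call such as `tcp_connect_scan("127.0.0.1", [22, 80, 443])` probes three ports on the local machine. Real scanners use many other probe types (for example, half-open SYN scans and UDP probes) that this sketch does not attempt.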

A

ping sweep, also known as an Internet Control Message Protocol (ICMP)

sweep, is a basic network-scanning technique that is used to determine

which IP addresses map to live hosts (computers). A ping sweep consists

of ICMP echo-requests (pings) sent to multiple hosts, whereas a single

ping consists of ICMP echo-requests that are sent to one specific host

computer. If a given address is live, that host returns an ICMP

echo-reply. The goal of the ping sweep is to find hosts available on the

network to probe for vulnerabilities. Ping sweeps are among the oldest

and slowest methods that are used to scan a network.
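To make the structure of a ping sweep concrete, the following sketch builds an ICMP echo-request and lists the addresses a sweep of a given block would probe. It deliberately stops short of sending anything, because raw ICMP sockets usually require administrative privileges. The function names and the example payload are assumptions made for this illustration.

```python
import ipaddress
import struct

def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum, as used in the ICMP header."""
    if len(data) % 2:
        data += b"\x00"  # pad to an even number of bytes
    total = sum(struct.unpack("!%dH" % (len(data) // 2), data))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)  # fold carries back in
    return ~total & 0xFFFF

def build_echo_request(ident: int, seq: int, payload: bytes = b"ping") -> bytes:
    """Build an ICMP echo-request (type 8, code 0) with a valid checksum."""
    header = struct.pack("!BBHHH", 8, 0, 0, ident, seq)
    checksum = internet_checksum(header + payload)
    return struct.pack("!BBHHH", 8, 0, checksum, ident, seq) + payload

def sweep_targets(cidr: str):
    """List the host addresses that a sweep of `cidr` would probe."""
    return [str(ip) for ip in ipaddress.ip_network(cidr).hosts()]
```

A sweep is then simply the same echo-request sent to every address returned by `sweep_targets`, with each echo-reply marking a live host.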

IP Spoofing Attacks

The

prime goal of an IP spoofing attack is to establish a connection that

allows the attacker to gain root access to the host and to create a

backdoor entry path into the target system.

IP spoofing

is a technique used to gain unauthorized access to computers whereby

the intruder sends messages to a computer with an IP address that

indicates the message is coming from a trusted host. The attacker learns

the IP address of a trusted host and modifies the packet headers so

that it appears that the packets are coming from that trusted host.

At

a high level, the concept of IP spoofing is easy to comprehend. Routers

determine the best route between distant computers by examining the

destination address, and ignore the source address. In a spoofing

attack, an attacker outside your network pretends to be a trusted

computer by using a trusted internal or external IP address.
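Because forwarding decisions ignore the source address, one way to reason about this attack is to check the source explicitly at the network edge. The sketch below flags packets that claim an internal source address but arrive from the outside. The address range `10.0.0.0/8` and the function name `looks_spoofed` are assumptions chosen for this example; a real deployment would express the same check in the filtering rules of its edge devices.

```python
import ipaddress

# Assumed internal address space for this illustration
INTERNAL_NETS = [ipaddress.ip_network("10.0.0.0/8")]

def looks_spoofed(src_ip: str, arrived_on_external: bool) -> bool:
    """Flag packets that claim an internal source but arrive from outside."""
    src = ipaddress.ip_address(src_ip)
    claims_internal = any(src in net for net in INTERNAL_NETS)
    return arrived_on_external and claims_internal
```

This check catches only spoofed internal addresses. A spoofed trusted external address, which the text also mentions, cannot be detected this way.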

If

an attacker manages to change the routing tables to divert network

packets to the spoofed IP address, the attacker can receive all the

network packets addressed to the spoofed address and reply just as any

trusted user can.

IP spoofing can also provide access

to user accounts and passwords. For example, an attacker can emulate one

of your internal users in ways that prove embarrassing for your

organization. The attacker could send email messages to business

partners that appear to have originated from someone within your

organization. Such attacks are easier to perpetrate when an attacker has

a user account and password, but they are also possible when attackers

combine simple spoofing attacks with their knowledge of messaging

protocols.

A rudimentary use of IP spoofing also involves bombarding hosts with IP packets or ping requests whose source address is spoofed to be a third party's registered public address. When each destination host receives a request, it responds to what appears to be a legitimate sender. If multiple hosts are sent spoofed requests, their collective replies to the third-party spoofed IP address create an unsupportable flood of packets, resulting in a DoS attack against that third party.
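A rough calculation shows why the collective replies matter. The sketch below estimates the traffic arriving at the spoofed address; the function name and every figure in the example are hypothetical values chosen for illustration, not measurements.

```python
def reflected_load_bps(reflectors: int, replies_per_second: int, reply_bytes: int) -> int:
    """Estimate bits per second arriving at the spoofed (victim) address."""
    return reflectors * replies_per_second * reply_bytes * 8

# Hypothetical scenario: 1,000 hosts each send 10 replies of 100 bytes per second
load = reflected_load_bps(1000, 10, 100)
```

Under these assumed numbers the victim receives 8,000,000 bits per second, even though each responding host sends only a trickle of traffic.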

Technical Discussion of IP Spoofing

TCP/IP

works at Layer 3 and Layer 4 of the Open Systems Interconnection (OSI)

model, with IP at Layer 3 and TCP at Layer 4. IP is a connectionless protocol,

which means that packet headers do not contain information about the

transaction state that is used to route packets on a network. There is

no method in place to ensure proper delivery of a packet to the

destination, since at Layer 3, there is no acknowledgement sent back to

the source by the destination once it has received the packet.

The

IP header contains the source and destination IP addresses. Using one

of several tools, an attacker can easily modify the source address

field. Note that in IP each datagram is independent of all others

because of the stateless nature of IP. To engage in IP spoofing, hackers

find the IP address of a trusted host and modify their own packet

headers to appear as though packets are coming from that trusted host

(source address).
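The following sketch packs a minimal IPv4 header to show that the source address is simply a 4-byte field filled in by the sender; nothing in the header authenticates it. The helper names are assumptions made for this example, the header checksum is left at zero for brevity, and no packet is actually sent.

```python
import socket
import struct

def build_ipv4_header(src: str, dst: str, payload_len: int = 0) -> bytes:
    """Pack a minimal 20-byte IPv4 header (checksum not computed)."""
    version_ihl = (4 << 4) | 5          # IPv4, header length of 5 32-bit words
    total_len = 20 + payload_len
    return struct.pack(
        "!BBHHHBBH4s4s",
        version_ihl, 0, total_len,
        0, 0,                            # identification, flags/fragment offset
        64, socket.IPPROTO_TCP, 0,       # TTL, protocol, checksum placeholder
        socket.inet_aton(src), socket.inet_aton(dst),
    )

def source_address(header: bytes) -> str:
    """Read the source address field (bytes 12 through 15) from a header."""
    return socket.inet_ntoa(header[12:16])
```

Calling `build_ipv4_header("192.0.2.10", "198.51.100.5")` yields a header whose source field reads back as `192.0.2.10` regardless of the address the sending machine actually holds.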

TCP uses a connection-oriented

design. This design means that the participants in a TCP session must

first build a connection using the three-way handshake, as shown in

Figure 1-4.

Figure 1-4. TCP Three-Way Handshake

After

the connection is established, TCP ensures data reliability by applying

the same process to every packet as the two machines update one another

on progress. The sequence and acknowledgments take place as follows:

- Step 1. The client selects and transmits an initial sequence number.

- Step 2. The server acknowledges the initial sequence number and sends its own sequence number.

- Step 3. The client acknowledges the server sequence number, and the connection is open to data transmission.
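The exchange above can be traced with concrete numbers. This sketch models only the sequence and acknowledgment values of the three segments; the function name and the tuple layout are assumptions made for this illustration, and no network traffic is generated.

```python
import secrets

MOD = 2**32  # TCP sequence numbers are 32 bits wide and wrap around

def three_way_handshake(client_isn=None, server_isn=None):
    """Return the (flags, seq, ack) values exchanged while opening a connection."""
    x = secrets.randbelow(MOD) if client_isn is None else client_isn
    y = secrets.randbelow(MOD) if server_isn is None else server_isn
    syn = ("SYN", x, None)                       # client selects initial sequence number x
    syn_ack = ("SYN-ACK", y, (x + 1) % MOD)      # server acknowledges x + 1, sends its own y
    ack = ("ACK", (x + 1) % MOD, (y + 1) % MOD)  # client acknowledges y + 1
    return [syn, syn_ack, ack]
```

With `three_way_handshake(100, 5000)`, the server acknowledges 101 and the client acknowledges 5001. Each side can complete the exchange only if it knows the other side's initial sequence number.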

Sequence Prediction

The

basis of IP spoofing during a TCP communication lies in an inherent

security weakness known as sequence prediction. Hackers can guess or

predict the TCP sequence numbers that are used to construct a TCP packet

without receiving any responses from the server. Their prediction

allows them to spoof a trusted host on a local network. To mount an IP

spoofing attack, the hacker listens to communications between two

systems. The hacker sends packets to the target system with the source

IP address of the trusted system, as shown in Figure 1-5.

Figure 1-5. Sequence Number Prediction

If

the packets from the hacker have the sequence numbers that the target

system is expecting, and if these packets arrive before the packets from

the real, trusted system, the hacker becomes the trusted host.
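A toy model shows why predictable initial sequence numbers are a weakness. Here a hypothetical server advances its initial sequence number by a fixed step for each new connection, so an observer who sees two consecutive values can extrapolate the next one. The class names, the starting value, and the step size are all assumptions made for this sketch; they do not describe any particular operating system.

```python
import secrets

MOD = 2**32

class PredictableServer:
    """Toy server whose ISN grows by a fixed step per connection (hypothetical)."""
    def __init__(self, start=1000, step=64000):
        self._next, self._step = start, step

    def new_isn(self):
        isn = self._next
        self._next = (self._next + self._step) % MOD
        return isn

class RandomServer:
    """Toy server that draws each ISN at random."""
    def new_isn(self):
        return secrets.randbelow(MOD)

def predict_next(isn_a, isn_b):
    """Extrapolate the next ISN from two consecutive observations."""
    return (isn_b + (isn_b - isn_a)) % MOD
```

Against `PredictableServer`, `predict_next` produces exactly the value the server will use next. Against `RandomServer`, the same guess is right only by chance, which is why randomized initial sequence numbers make this form of spoofing much harder.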

To

engage in IP spoofing, hackers must first use a variety of techniques

to find an IP address of a trusted host and then modify their packet

headers to appear as though packets are coming from that trusted host.

Further, the attacker can engage other unsuspecting hosts to generate

traffic that appears as though it too is coming from the trusted host,

thus flooding the network.

Trust Exploitation

Trust exploitation refers to an individual taking advantage of a trust relationship within a network.

As an example of trust exploitation, consider the network shown in Figure 1-6, where System A is in the demilitarized zone (DMZ) of a firewall. System B, located on the inside of the firewall, trusts System A. When a hacker on the outside network compromises System A in the DMZ, the attacker can leverage the trust relationship that System A has with System B to gain access to System B.

Figure 1-6. Trust Exploitation

A DMZ can be seen as a semi-secure segment of your network. A DMZ is typically used to provide outside users with access to corporate resources, because these users are not allowed to reach inside servers directly. However, a DMZ server might be allowed to reach inside resources directly. In a trust exploitation attack, a hacker could compromise a DMZ server and use it as a springboard to reach the inside network.

Several trust models may exist in a network:

- Windows

  - Domains

  - Active Directory

- Linux and UNIX

  - Network File System (NFS)

  - Network Information Service Plus (NIS+)

Password Attacks

Password attacks can be implemented using several methods, including brute-force attacks, Trojan horse programs, IP spoofing, keyloggers, packet sniffers, and dictionary attacks. Although packet sniffers and IP spoofing can yield user accounts and passwords, password attacks usually refer to repeated attempts to identify a user account, password, or both. These repeated attempts are called brute-force attacks.

To execute a brute-force attack, an attacker can use a program that runs across the network and attempts to log in to a shared resource, such as a server. When an attacker gains access to a resource, the attacker has the same access rights as the rightful user. If this account has sufficient privileges, the attacker can create a back door for future access, without concern for any status and password changes to the compromised user account.

Just as with packet sniffers and IP spoofing attacks, a brute-force password attack can provide access to accounts that attackers then use to modify critical network files and services. For example, an attacker might compromise your network integrity by modifying your network routing tables. This trick reroutes all network packets to the attacker before transmitting them to their final destination. In such a case, an attacker can monitor all network traffic, effectively becoming a man in the middle.

Passwords present a security risk if they are stored as plain text, so they must be protected cryptographically before they are stored. On most systems, passwords are processed through an algorithm that generates a one-way hash of the password. You cannot reverse a one-way hash back to its original text. Most systems therefore never decrypt a stored password during authentication; they store only the one-way hash. During the login process, you supply an account and password, and the same algorithm generates a one-way hash of the password you supplied. The system compares this hash to the hash stored for the account. If the hashes are the same, the system assumes that the user supplied the proper password.

Remember that passing the password through an algorithm results in a password hash. The hash is not the encrypted password, but rather a result of the algorithm. The strength of the hash is such that the hash value can be re-created only by using the original user and password information, and that it is computationally infeasible to retrieve the original information from the hash. This strength makes hashes well suited for encoding passwords for storage. During authentication, the hashes, rather than the plain-text passwords, are calculated and compared.
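The store-and-compare process described above can be sketched with the Python standard library. This is a minimal sketch under stated assumptions, not a description of how any particular system stores passwords: the function names `hash_password` and `verify_password` are hypothetical, and the use of a per-user random salt and of PBKDF2 with the iteration count shown are choices made for this example that the text does not prescribe.

```python
import hashlib
import hmac
import secrets
from typing import Optional, Tuple

ITERATIONS = 100_000  # illustrative work factor, an assumption of this sketch


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, hash). Only these two values are stored, never the password."""
    if salt is None:
        salt = secrets.token_bytes(16)  # fresh random salt for a new account
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(candidate: str, salt: bytes, stored_hash: bytes) -> bool:
    """Hash the supplied password the same way and compare the two hashes."""
    _, digest = hash_password(candidate, salt)
    # compare_digest performs the comparison in constant time.
    return hmac.compare_digest(digest, stored_hash)


if __name__ == "__main__":
    salt, stored = hash_password("correct horse battery staple")
    print(verify_password("correct horse battery staple", salt, stored))
    print(verify_password("wrong guess", salt, stored))
```

Nothing in the login path reverses the stored value; the candidate password is hashed again and the two hashes are compared. The salt is an addition in this sketch: it makes identical passwords hash to different values, which also limits the usefulness of precomputed hash lists.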

Hackers use many tools and techniques to crack passwords:

- Word lists: These programs use lists of words, phrases, or other combinations of letters, numbers, and symbols that computer users often use as passwords. Hackers enter word after word at high speed (called a dictionary attack) until they find a match.

- Brute force: This approach relies on power and repetition. It tries every possible combination and permutation of characters until it finds a match. Brute force eventually cracks any password, but because it works through every conceivable character combination, it can take an extremely long time.

- Hybrid crackers: Some password crackers mix the two techniques. This combines the best of both methods and is highly effective against poorly constructed passwords.
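A short calculation shows why exhaustive brute force can take so long. The sketch below counts the candidates for a given alphabet size and password length and converts that count into a worst-case search time. The guess rate of one billion attempts per second is a hypothetical figure chosen only for illustration, and the helper names are introduced here.

```python
def keyspace(alphabet_size: int, length: int) -> int:
    """Number of candidate passwords of exactly `length` characters."""
    return alphabet_size ** length


def worst_case_seconds(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Time needed to try every candidate at a constant guess rate."""
    return keyspace(alphabet_size, length) / guesses_per_second


if __name__ == "__main__":
    RATE = 1e9  # hypothetical guesses per second, for illustration only
    SECONDS_PER_YEAR = 365 * 24 * 3600
    examples = [
        ("6 lowercase letters", 26, 6),
        ("8 letters and digits", 62, 8),
        ("12 printable ASCII characters (no space)", 94, 12),
    ]
    for label, alphabet, length in examples:
        candidates = keyspace(alphabet, length)
        years = worst_case_seconds(alphabet, length, RATE) / SECONDS_PER_YEAR
        print(f"{label}: {candidates:.3e} candidates, about {years:.3g} years worst case")
```

Because the number of candidates grows exponentially with length, each added character multiplies the search time by the alphabet size. Word lists and hybrid crackers avoid this cost by trying likely passwords first, which is why they are so effective against poorly constructed passwords.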

Password-cracking attacks can target any application or service that accepts user authentication, including the following:

- NetBIOS over TCP (TCP 139)

- Direct host (TCP 445)

- FTP (TCP 21)

- Telnet (TCP 23)

- Simple Network Management Protocol (SNMP) (UDP 161)

- Point-to-Point Tunneling Protocol (PPTP) (TCP 1723)

- Terminal services (TCP 3389)

NOTE

RainbowCrack is a password-cracking tool that relies on precomputed tables of hashes, which give crackers a list that they can use to attempt to match hashes that they capture with sniffers.

Confidentiality and Integrity Attacks

Confidentiality breaches can occur when an attacker attempts to obtain access to read sensitive data. These attacks can be extremely difficult to detect because the attacker can copy sensitive data without the knowledge of the owner and without leaving a trace.

A confidentiality breach can occur simply because of incorrect file protections. For instance, a sensitive file could mistakenly be given global read access. Unauthorized copying or examination of the file would probably be difficult to track without having some type of audit mechanism running that logs every file operation. If a user had no reason to suspect unwanted access, however, the audit file would probably never be examined.
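One way to catch the kind of mistaken global read access described above is to scan a directory tree for files that anyone on the system can read. The following is a minimal sketch that assumes POSIX-style permission bits; the function name `world_readable_files` is hypothetical, and a real audit would also have to consider access control lists and directory permissions.

```python
import os
import stat
from pathlib import Path
from typing import Iterator


def world_readable_files(root: Path) -> Iterator[Path]:
    """Yield regular files under `root` whose mode grants read access to 'other'."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                mode = path.lstat().st_mode  # lstat: do not follow symbolic links
            except OSError:
                continue  # file vanished or is inaccessible; skip it
            if stat.S_ISREG(mode) and mode & stat.S_IROTH:
                yield path
```

A call such as `list(world_readable_files(Path("/srv/payroll")))`, where the directory name is only an example, returns the files that deserve a closer look. A check like this complements an audit log: it finds the misconfiguration before anyone has to notice an unwanted access.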

In Figure 1-7, the attacker is able to compromise an exposed web server. Using this server as a beachhead, the attacker then gains full access to the database server from which customer data is downloaded. The attacker then uses information from the database, such as a username, password, and email address, to intercept and read sensitive email messages destined for a user in the branch office. This attack is difficult to detect because the attacker did not modify or delete any data. The data was only read and downloaded. Without some kind of auditing mechanism on the server, it is unlikely that this attack will be discovered.

Figure 1-7. Breach of Confidentiality

Attackers can use many methods to compromise confidentiality, the most common of which are as follows:

- Ping sweeps and port scanning: Probing a network for live hosts and searching those hosts for open ports.

- Packet sniffing: Intercepting and logging traffic that passes over a digital network or part of a network.

- Emanations capturing: Capturing electrical transmissions from the equipment of an organization to deduce information regarding the organization.

- Overt channels: Listening on obvious and visible communications. Overt channels can be used for covert communication.

- Covert channels: Hiding information within a transmission channel that is based on encoding data using another set of events.

- Wiretapping: Monitoring the telephone or Internet conversations of a third party, often covertly.

- Social engineering: Using social skills or relationships to manipulate people inside the network to provide the information needed to access the network.

- Dumpster diving: Searching through company dumpsters or trash cans looking for information, such as phone books, organization charts, manuals, memos, charts, and other documentation that can provide a valuable source of information for hackers.

- Phishing: Attempting to criminally acquire sensitive information, such as usernames and passwords, by masquerading as trustworthy entities.

- Pharming: Redirecting the traffic of a website to another, rogue website.

Many of these methods are used to compromise more than confidentiality. They are often elements of attacks on integrity and availability.

Man-in-the-Middle Attacks

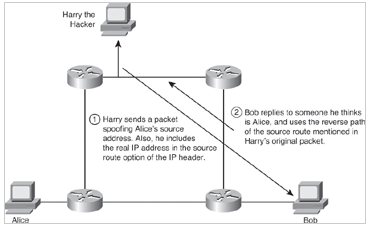

A complex form of IP spoofing is called a man-in-the-middle attack, where the hacker monitors the traffic that comes across the network and introduces himself as a stealth intermediary between the sender and the receiver, as shown in Figure 1-8.

Figure 1-8. IP Source Routing Attack

Hackers use man-in-the-middle attacks to perform many security violations:

- Theft of information

- Hijacking of an ongoing session to gain access to your internal network resources

- Analysis of traffic to derive information about your network and its users

- DoS

- Corruption of transmitted data

- Introduction of new information into network sessions

Attacks are either blind or nonblind. A blind attack interferes with a connection that takes place from outside, where sequence and acknowledgment numbers are unreachable. A nonblind attack interferes with connections that cross wiring used by the hacker. A good example of a blind attack can be found at http://wiki.cas.mcmaster.ca/index.php/The_Mitnick_attack.

TCP session hijacking is a common variant of the man-in-the-middle attack. The attacker sniffs to identify the client and server IP addresses and relative port numbers. The attacker modifies his or her packet headers to spoof TCP/IP packets from the client, and then waits to receive an ACK packet from the client communicating with the server. The ACK packet contains the sequence number of the next packet that the client is expecting. The attacker replies to the client using a modified packet with the source address of the server and the destination address of the client. This packet results in a reset that disconnects the legitimate client. The attacker takes over communications with the server by spoofing the expected sequence number from the ACK that was previously sent from the legitimate client to the server. (This could also be an attack against confidentiality.)

Another clever man-in-the-middle attack is for the hacker to successfully introduce himself as the DHCP server on the network, providing his own IP address as the default gateway during the DHCP offer.

NOTE

At

this point, having read about many different attacks, you might be

concerned that the security of your network is insufficient. Do not